We are experiencing a new Promethean moment: Just as Prometheus giving fire to humanity transformed civilization, recent technological advancements, like AI, genomics, or quantum computing, promise to transform how business and society work.

Technological advancements bring great benefits to society, often exceeding the expectations of their developers. For example, laser beams were developed in the 1950s for sending signals, but soon found widespread use in such diverse fields as precision measurement, surgery, spectroscopy, and reading optical disks. The benefits of new technologies, however, are not always evenly distributed across society. This has been true for centuries (for details, see Daron Acemoglu and Simon Johnson’s historical review of technology in Power and Progress, or listen to our podcast on the book) and remains so today: In just three years, mRNA vaccines saved millions of lives and led to the award of a Nobel Prize. Yet the radically unequal distribution of the Covid-19 vaccine created much-discussed inequalities both within and among nations.

Technological progress almost always comes with unintended consequences: Nuclear fission can be used for devastating warfare, and social media has alleged adverse effects on the mental health of adolescents. The next wave of technologies has several characteristics that may amplify the magnitude of these negative externalities. (For reference, see Mustafa Suleyman’s The Coming Wave, or listen to our podcast with the author.)

First, their benefits and risks have been described as asymmetric, in that a single system can have outsized effects. One viable quantum computer could render the world’s encryption infrastructure obsolete; one AI program could write as much text as all of humanity. Second, these technologies are inherently dual-use. AI tools are designing entirely new proteins, which could transform medicine, but the same tools could also be used to create pandemic-level pathogens as bioweapons. Third, they are potentially autonomous. AI is already making recruiting or lending decisions independently of human oversight, in processes that have been criticized for perpetuating historical biases from the datasets on which these models are trained.

As a result of these potential side effects, and of the potential massive benefits of these technologies, the pressure to design appropriate regulations is higher than ever. Historically, society has relied on top-down, slow-moving, and static regulation to cultivate the benefits and mitigate the externalities of modern technologies. However, this traditional model may be insufficient for keeping up with the speed, complexity, and power of the next wave of technologies. Moreover, increased social polarization and geopolitical strife may put the democratic systems we use for developing these top-down rules under threat.

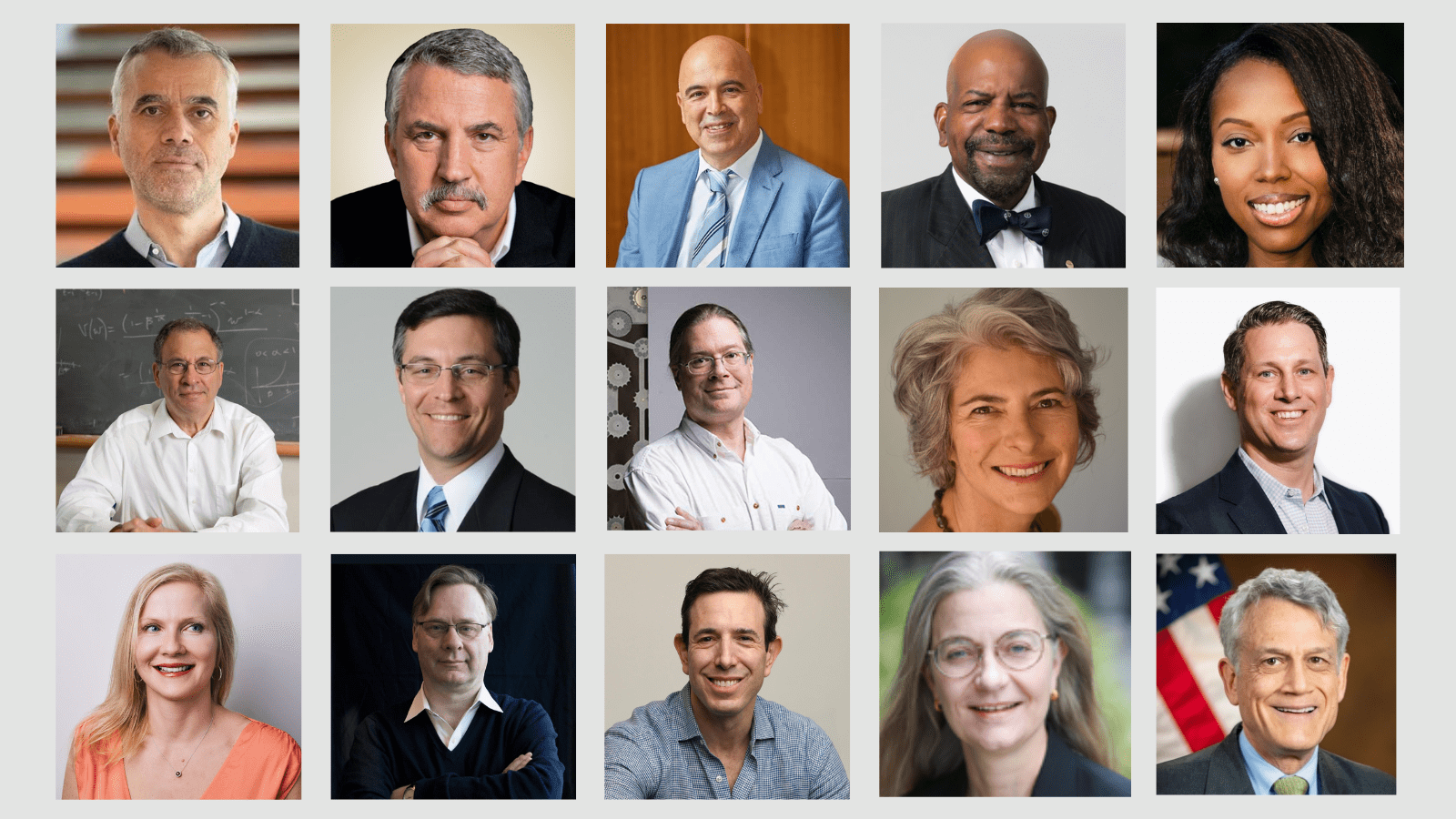

To learn more about the challenges of regulating currently ascending technologies, and to explore novel regulatory approaches, we assembled a group of thought leaders from academia, business, and government. Unsurprisingly, no consensus emerged; below, we summarize the diverse perspectives that surfaced in the discussion.

The participants included:

- Theodoros Evgeniou, professor of decision sciences and technology management at INSEAD

- Thomas L. Friedman, columnist at the New York Times and three-time Pulitzer Prize winner

- Konstantinos Karachalios, former managing director of the Institute of Electrical and Electronics Engineers (IEEE) Standards Association and member of the IEEE Management Council

- Cato T. Laurencin, University Professor of the University of Connecticut and chief executive officer of the Cato T. Laurencin Institute on Regenerative Engineering

- Nandi O. Leslie, principal technical fellow at RTX and lecturer and research advisor in computational mathematics and data science at Johns Hopkins University

- Simon Levin, James S. McDonnell Distinguished University Professor in Ecology and Evolutionary Biology, Princeton University

- Mark T. Maybury, vice president of commercialization, engineering, and technology at Lockheed Martin

- Cristopher Moore, professor at the Santa Fe Institute

- Deirdre K. Mulligan, principal deputy US chief technology officer at the White House Office of Science and Technology Policy and professor in the School of Information at UC Berkeley

- Michael Nally, CEO of Generate:Biomedicines and CEO/partner at Flagship Pioneering

- Frida Polli, founder of Alethia, and previously CEO and co-founder of Pymetrics

- Martin Reeves, managing director and senior partner at BCG and chair of the BCG Henderson Institute

- Bradley Tusk, co-founder and managing partner of Tusk Venture Partners

- Jane K. Winn, professor in the Center for Advanced Study and Research on Innovation Policy at the University of Washington

- Peter A. Winn, acting chief privacy and civil liberties officer of the U.S. Department of Justice

Challenges of technology regulation

There are several perennial challenges in regulating technologies. For one, there is regulatory lag, as technologies typically develop more quickly than the frameworks designed to govern them. By the time regulations are drafted and implemented, the technology may have moved on, rendering the regulations outdated or ineffective.

Moreover, the complexity, novelty, and ambiguity of emerging technologies can be difficult for policymakers, or even experts, to understand—making it challenging to predict the impact of regulatory interventions.

Finally, regulation itself may have side effects: By attempting to curb technologies, regulation may limit innovation and the associated benefits. For example, it may create barriers to entry for startups, divert incumbents’ resources from R&D to compliance, or limit interoperability due to incompatible frameworks across countries. These trade-offs are often exacerbated by the monolithic nature of traditional regulation, which applies the same rules even though a new general-purpose technology may carry very different risks and benefits in different fields of application.

Beyond these perennial challenges, today’s emerging technologies exhibit several properties that make them particularly difficult to manage with traditional approaches to regulation. First, regulatory lag may be exacerbated by the sheer pace of progress. As an illustration, consider that the computational power involved in training AI models (measured in floating point operations, or FLOPs) has roughly doubled every six months since 2010.
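To make the scale of that doubling rate concrete, the short sketch below works through the compounding arithmetic. It assumes a constant six-month doubling time, which is a simplification of the trend quoted above; the function name `growth_factor` and the three-year drafting period are illustrative assumptions, not figures from the discussion.

```python
def growth_factor(years: float, doubling_time_years: float = 0.5) -> float:
    """Multiplicative growth after `years`, assuming a fixed doubling time."""
    return 2.0 ** (years / doubling_time_years)


# One year at a six-month doubling time is two doublings.
one_year = growth_factor(1)

# Hypothetical case: a regulation that takes three years to draft and adopt.
three_year_lag = growth_factor(3)

# Ten years is twenty doublings, i.e. 2**20.
ten_years = growth_factor(10)

print(f"1 year:   {one_year:,.0f}x")
print(f"3 years:  {three_year_lag:,.0f}x")
print(f"10 years: {ten_years:,.0f}x")
```

Under this assumption, training compute grows 4-fold in one year, 64-fold over a three-year drafting period, and roughly a million-fold over a decade, which illustrates why a rule calibrated to today's models may describe a very different technology by the time it takes effect.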

Second, these technologies and the associated risks transcend national borders. For example, a single pathogenic experiment could spark a global pandemic. Effective regulation of such technologies requires international coordination and alignment across diverse regulatory regimes, which further exacerbates regulatory lag.

Third, these technologies can be opaque, even to experts. For example, the behaviors of large language models emerge from a training process, rather than being specified by programmers. As a result, the mechanisms that underpin their responses are not known, even to their creators, and scientists evaluating them have described the task as similar to investigating an “alien intelligence.”

Fourth, due to the asymmetric nature of these technologies, power can be concentrated with the few players possessing the appropriate knowledge and resources. Regulating such powerhouse corporations will likely prove challenging. Moreover, an asymmetric distribution of talent between corporations and the public sector exacerbates the issue.

Fifth, industry and academia have become increasingly intertwined, with many innovations stemming from research funded by private companies. This phenomenon can undermine academic independence and integrity, which regulators have relied on as a neutral voice.

Further complicating the above properties, today’s technological advancements are occurring in the context of increased pressure on the democratic systems relied upon to regulate them. Many democracies around the world are under threat, and societies are increasingly polarized. Digital technologies may aggravate these issues. Geopolitical turbulence, with several states engaged in open warfare or trade tensions, exacerbates the trade-offs associated with regulating technological progress, which may also affect national security and economic competitiveness.

Novel approaches to technology regulation

Based on a more nuanced understanding of the unique challenges of regulating emerging technologies, our discussion unearthed several innovative approaches, including enhancements of the top-down legislation and interventions, proactive bottom-up involvement by industry, and, finally, creating adaptive coalitions across involved parties.

Enhancements of top-down legislation and government interventions

Governments could play a critical role in legislation and through targeted interventions—like funding AI research, conducting antitrust investigations, and taking domain-specific actions in areas like healthcare and financial services. Policy makers and regulators could consider several concepts and methods in designing solutions:

Risk-based approaches. Rather than applying uniform, command-and-control rules, regulators could consider risk-based frameworks that tailor compliance requirements in line with the magnitude and likelihood of risks. For example, in anti-money laundering efforts, the Financial Action Task Force advocates for a risk-based approach, whereby banks conduct enhanced due diligence procedures for high-risk customers, such as politically exposed persons, while applying simplified measures for lower-risk customers.

With modern technologies, like AI or gene editing, regulators could devise a similar approach: assess the magnitude and likelihood of risks using indicators such as user reach, training data scale, or model sophistication, and then apply graduated regulatory requirements accordingly. Such smart, differentiated ("deaveraged") regulations could alleviate the regulatory burden on small, innovative firms (typically with smaller risk profiles), allowing governance to evolve in line with firms’ sizes, and thus their potential impact.
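As a rough illustration of how a risk-based framework might be operationalized, the sketch below maps a magnitude score and a likelihood score to a compliance tier. Everything in it is a hypothetical assumption made for illustration: the 1-to-5 scales, the multiplicative score, the thresholds, and the tier names are not drawn from any actual regulation or from the Financial Action Task Force's guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskProfile:
    """Hypothetical risk assessment for a product or firm."""
    magnitude: int   # 1 (minor harm) to 5 (severe harm), assumed scale
    likelihood: int  # 1 (rare) to 5 (near certain), assumed scale


def risk_tier(profile: RiskProfile) -> str:
    """Map a risk profile to a compliance tier using assumed thresholds."""
    score = profile.magnitude * profile.likelihood  # ranges from 1 to 25
    if score >= 15:
        return "enhanced"    # e.g. audits and pre-deployment review
    if score >= 6:
        return "standard"
    return "simplified"      # lighter obligations for low-risk cases


# Hypothetical examples: a small firm's narrow tool vs. a widely deployed model.
startup_tool = RiskProfile(magnitude=2, likelihood=2)
frontier_model = RiskProfile(magnitude=5, likelihood=3)

print(risk_tier(startup_tool))    # simplified
print(risk_tier(frontier_model))  # enhanced
```

In practice, the inputs would have to be derived from measurable indicators (such as user reach or training data scale), and choosing those indicators and thresholds is the hard policy question; the sketch only shows the tiering logic.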

Using technology to extend regulatory capacity and effectiveness. While there is significant hype and uncertainty around the full potential of AI, early research from MIT indicates it can substantially boost highly skilled workers’ productivity. The private sector is already hard at work putting this to use, and public agencies could follow suit. For example, AI appears to have significant potential to enhance the rate of drug discovery. To deal with the ensuing increase in workload, the Food and Drug Administration (FDA) could assess how to leverage AI to evaluate new drugs. One proposal to consider is to set up a specialized AI service, akin to the U.S. Digital Service (USDS), to advise and support federal agencies looking to use AI to improve government services and to carry out policymaking, regulatory, and enforcement duties.

Accessible infrastructure. An important antidote to the concentration of research and development capabilities with a few market-leaders is to provide public research infrastructure. For example, the National Artificial Intelligence Research Resource Task Force has developed a roadmap to bring together “computational resources, data, testbeds, algorithms, software, services, networks, and expertise” to empower researchers. Google has recommended funding public–private partnerships to build and maintain high-quality datasets for AI research. An important model of this is CERN: In 1954, an intergovernmental organization was established that built and operates the largest particle physics laboratory in the world. This has contributed to groundbreaking discoveries like the Higgs boson.

International coordination. Corporations and technologies cross borders. Therefore, many of the regulatory challenges are global, and different authorities are likely to default to different approaches. As such, enhanced coordination across governments may be required. In a promising first step, at the AI Safety Summit in November 2023, 28 countries (including the US, UK, and China) signed the Bletchley Declaration, resolving “to support an internationally inclusive network of scientific research on frontier AI safety” and “to sustain global dialogue.” But governments might need to go beyond such “soft” agreements toward harmonized technical standards, an international treaty, or a new international agency.

Bottom-up approaches led by industry

Companies might consider a more proactive approach to self-regulation, for several reasons. First, it is a crucial step in collaborating with governments to shape controls and regulations that are appropriate and effective, for example, by ensuring that they are not monolithic, but tailored to each sector. From the government’s perspective, this approach offers the benefit of increased ownership from industry, which potentially speeds up implementation and improves compliance. Second, companies failing to consider negative externalities risk causing a “tragedy of the commons,” which can potentially degrade the very ecosystem that facilitates their success. Third, actively engaging in a coalition of peers promotes cooperation and the sharing of best practices, and reduces the risk of free riding.

Key steps for industry players to consider include:

Industry standards. While these new technological waves will likely impact all sectors, the implications will be industry specific. Businesses could organize within industries to define standards, guidelines, and voluntary norms (for example, on interoperability, security, ethics, and data privacy), as well as to monitor and enforce compliance.

For example, in the early days of online airline reservation systems, concerns arose about competitive fairness, especially given the dominance of systems like American Airlines’ SABRE and United’s Apollo. The International Air Transport Association (IATA) played a vital role in standardizing display options and search results, promoting a more competitive and equitable environment for all airlines.

More recently, more than 100 biotech scientists have signed a list of values, principles, and commitments governing the responsible use of AI for protein design. Leading AI developers Anthropic, Google, Microsoft, and OpenAI have formed the Frontier Model Forum, an industry body focused on ensuring safe and responsible development of frontier AI models.

Adaptive governance. Specific technologies, such as AI models, will likely change rapidly. As a result, businesses may need to shift from classical, slow cycle, planning-based governance to an approach that focuses on adaptivity: This could mean relying on guiding principles, which can inform specific rulesets that are regularly updated. It can also involve experimenting with new policies and creating strong selection mechanisms to scale up what works.

As an example, Google currently pursues a five-pronged approach to govern AI, including the formulation of deliberately broad principles that will remain relevant in a fast-changing environment, and a central team dedicated to reviewing the ethics of new AI research and technologies in light of these principles.

Ethical guidelines. Numerous professions have a long history of ethical codes, like the Hippocratic Oath in medicine or the American Bar Association’s Model Rules of Professional Conduct in law. Similarly, corporations could define and adopt industry-wide guidelines for the ethical development and deployment of these technologies.

One ethical principle could be to require human accountability for AI actions. For example, at Wikipedia, automated editing processes conducted by AI bots are crucial to maintaining the encyclopedia. Wikipedia upholds a policy stating that the operator of a bot is responsible for the repair of any damage caused by a bot that operates incorrectly. In a more challenging context, the Pentagon requires “appropriate levels of human judgement” over the use of autonomous weapon systems.

Safety or ethical principles could also be incorporated into the design and development phases of a product or service. For example, nuclear facilities are designed with inherent safety features such as a passive cooling system that functions without human intervention or electrical power, ensuring safety in case of system failures. In this vein, since 2016, the IEEE has been developing standards and tools for human-centric, values-based design of new AI systems and for quality assessment of existing systems according to such criteria. Similarly, engineers could program formal guidelines into AI systems to avoid violating agreed values. OpenAI is actively working on this problem: GPT-4 is 82% less likely than GPT-3.5 to respond to requests for disallowed content such as hate speech or code for malware.

Adaptive coalitions across parties

Top-down regulation and bottom-up initiatives alone may be insufficient. On the one hand, companies not aligned with top-down regulations could look for workarounds. For example, New York City’s law to audit AI tools involved in hiring decisions—which was established to counteract perpetuation of biases—has been substantially undermined due to a loophole. On the other hand, bottom-up initiatives can also be ineffective: Without a centralized authority, enforcing regulations can be inconsistent and fragmented. Further, these initiatives can be more susceptible to influence by dominant industry players who might steer regulations to favor their interests over the public good.

One critical component of the solution to regulating emerging technologies is coordination across all parties. One source of inspiration is Nobel Laureate Elinor Ostrom’s framework of polycentric governance: an adaptive coalition of multiple parties (such as industry, academia, and government) operating autonomously at different scales and levels, cooperating through shared values and norms to make and enforce rules within a specific policy area. This approach is well-suited for complex contexts with multiple authorities and scales, where centralized control is ineffective. How can polycentric, adaptive coalitions be built? Our discussion surfaced some initial ideas. (We also refer the reader to a recent proposition by New America’s Complexity and International Relations Study Group, which proposed a coalition-style regulation approach to AI, consisting of a …)

Identify and engage stakeholders. Identify and engage a diverse set of participants to address technical knowledge gaps, such as technologists, policymakers, academia, industry leaders, and civil society. For example, in 2020 the Israel Ministry of Transport (MoT) adopted an innovative process in developing a regulatory framework for the commercial deployment of autonomous vehicles. The MoT engaged a wide range of stakeholders, including industry leaders like Cruise, Waymo, and Zoox, academics, and non-profits. They also formed cross-ministerial partnerships, sharing best practices with other local and global regulators.

Coalitions can also take the form of grassroots movements. For example, researchers like Jonathan Haidt have tried to engage parents and educators in the effort to reduce the negative impact of social media on children’s mental health by calling for campaigns to reduce mobile phone use at school. In the UK, recently initiated campaigns already involve more than 75,000 parents and have led to engagement with the Prime Minister’s office to discuss these issues.

Create an emergent consensus. Coordination across parties under polycentric governance is achieved through shared values, ethics, and objectives. But this type of coordination requires much more work than simply publishing a shared set of principles. Consensus is painstakingly built through structured participatory processes and iterative dialogue. The management of the internet’s infrastructure is a notable example of polycentric governance, involving multiple stakeholders such as governments, private companies, non-profits, and individuals, operating under shared principles like openness, inclusivity, and decentralization. Different governing bodies, such as ICANN, IETF, and IEEE, facilitate working groups, public comment periods, annual meetings, and online forums to build consensus.

Develop a virtuous learning loop. All parties could collaborate to develop a virtuous learning loop to refine standards and regulations. As part of this, industry players could collect and share data on the effects and effectiveness of regulation with regulators and academia for assessment and iteration. For example, cybersecurity guidance regularly contains hundreds of instructions, without fully accounting for the vast variance in cost-benefit impact across them. A recent Cloudflare study found that a pragmatic approach—implementing just a small subset of these instructions—can be effective.
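The cost-benefit reasoning in this paragraph can be sketched as a simple prioritization exercise: rank instructions by estimated benefit per unit of cost and stop once a target share of the total benefit is covered. The control names, benefit and cost figures, and the 70% target below are entirely made-up placeholders chosen to show the mechanics; they are not taken from the Cloudflare study or from any real guidance.

```python
def prioritize_controls(controls, target_share):
    """Greedily select controls by benefit-per-cost until the selected
    controls cover `target_share` of the total estimated benefit.

    `controls` is a list of (name, benefit, cost) tuples.
    Returns the chosen names and the share of total benefit they cover.
    """
    total_benefit = sum(benefit for _, benefit, _ in controls)
    ranked = sorted(controls, key=lambda c: c[1] / c[2], reverse=True)

    chosen, covered = [], 0.0
    for name, benefit, _cost in ranked:
        if covered >= target_share * total_benefit:
            break
        chosen.append(name)
        covered += benefit
    return chosen, covered / total_benefit


# Hypothetical data: (name, estimated benefit, estimated cost).
controls = [
    ("control_a", 40.0, 2.0),
    ("control_b", 30.0, 3.0),
    ("control_c", 10.0, 5.0),
    ("control_d", 10.0, 10.0),
    ("control_e", 10.0, 20.0),
]

chosen, share = prioritize_controls(controls, target_share=0.7)
print(chosen, f"{share:.0%}")
```

With these placeholder numbers, two of the five controls cover 70% of the estimated benefit. A learning loop of the kind described above would replace the placeholders with shared, measured data and revisit the ranking as evidence accumulates.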

To support a learning loop, regulators could also deliberately set up tools that facilitate agile collaboration with industry. A successful example is the UK Financial Conduct Authority’s “regulatory sandbox,” which enables fintechs to test new products in a controlled setting under regulatory oversight and without incurring the normal regulatory consequences—accelerating time to market at potentially lower cost.

Finally, the evolution of regulations in the finance sector—from Bretton Woods in 1944 to Basel IV in 2023—is a salient lesson in reflecting on significant incidents, collaborating internationally across industry and regulatory stakeholders, and improving governance over time.

Regulating the next wave of technology will continue to be challenging. But in the words of Sundar Pichai, CEO of Alphabet and Google, “AI is too important not to regulate—and too important not to regulate well.”

Looking forward, technology has the potential to dramatically transform our lives. Influential CEOs in AI development such as Sam Altman, Dario Amodei, and Jensen Huang believe human-level AI will likely be developed in the next five years, which could radically alter the labor market. Experts in genomic medicine argue that many, if not most, “variants of uncertain significance” in coding regions will be resolved by 2030, which could usher in a new era of precision genomic medicine. IBM predicts that by 2033 it will deliver highly capable quantum supercomputers, supercharging technological progress across a wide range of industries.

Not all predictions will come to pass, but the world will certainly look very different, and regulation must evolve accordingly. The diverse set of emerging solution elements raised at our discussion provides a helpful starting point for governing technology. We are in the midst of a new Promethean moment: It’s now time to begin the arduous, but important, journey of governing the new era of technology for the benefit of all.